A conversational system with flexibility will require an intelligent control system. The usual linear system x[k+1] := A*x[k] + B*i[k] can be fashioned into a conventional control system, but that is less flexible than I would like the conversational system to be.
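For readers who have not worked with it, here is a minimal Python sketch of that linear update, x[k+1] = A*x[k] + B*i[k], where i[k] is the input at step k. The particular A, B, and input sequence are assumptions chosen only for illustration. Notice that the next state is a fixed linear function of the current state and input, with no place to weigh one goal against another.

```python
# Minimal sketch of the discrete-time linear system x[k+1] = A*x[k] + B*i[k].
# The matrices and inputs used in the demo are illustrative assumptions.
from typing import List

Matrix = List[List[float]]
Vector = List[float]

def mat_vec(M: Matrix, v: Vector) -> Vector:
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def step(A: Matrix, B: Matrix, x: Vector, i: Vector) -> Vector:
    """One update: next state = A*x + B*i."""
    return [a + b for a, b in zip(mat_vec(A, x), mat_vec(B, i))]

def simulate(A: Matrix, B: Matrix, x0: Vector, inputs: List[Vector]) -> List[Vector]:
    """Return the state trajectory [x0, x1, ..., xN] for a sequence of inputs."""
    states = [x0]
    for i in inputs:
        states.append(step(A, B, states[-1], i))
    return states

if __name__ == "__main__":
    A = [[1.0, 1.0],
         [0.0, 1.0]]   # assumed position/velocity style dynamics
    B = [[0.0],
         [1.0]]        # assumed: the input drives only the second state
    print(simulate(A, B, [0.0, 0.0], [[1.0], [0.0], [0.0]]))
    # [[0.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
```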

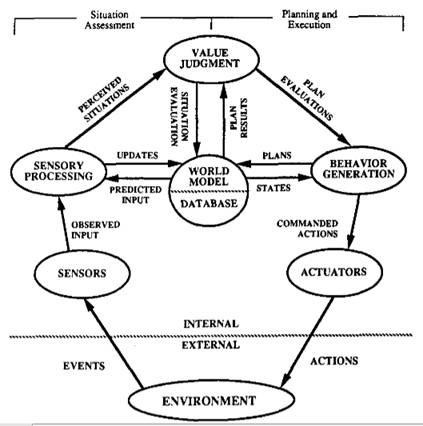

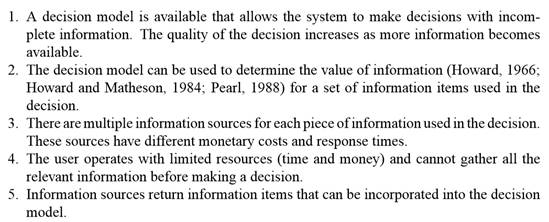

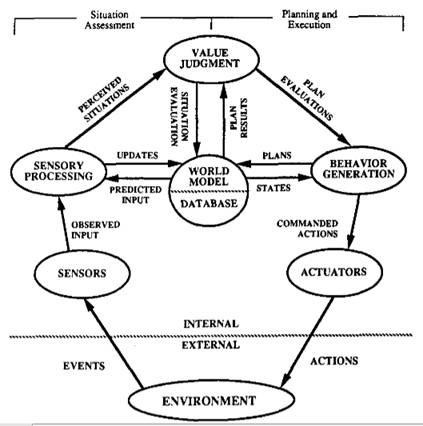

Here is an adaptation of linear systems to intelligent systems that adds a value judgment (VJ) subsystem. The whole progression from standard linear system to value-based system is shown at:

https://en.wikipedia.org/wiki/Real-time_Control_System

The so-called RCS-4 version, the recommended intelligent control system, is described at that link, which gives this overview:

Value state-variables define what goals are important and what objects or regions should be attended to, attacked, defended, assisted, or otherwise acted upon. Value judgments, or evaluation functions, are an essential part of any form of planning or learning. The application of value judgments to intelligent control systems has been addressed by George Pugh.[16]

The structure and function of VJ modules are developed more completely in Albus (1991).[2][17]

The Pugh reference is to RCS-4 concepts, while there is another Albus reference which includes figure 1 as the architecture overview below:

That architecture diagram is from "Outline for a Theory of Intelligence", which is available as a free PDF:

ftp://calhau.dca.fee.unicamp.br/pub/docs/ia005/Albus-outline.pdf

The value judgment (VJ) module appears to steer the logic behind the Planning and Execution side of the diagram, at the whims of the World Model Database. The old standby, the Situation Assessment side, figures out what can possibly be thought; the VJ module appears to figure out which of the available thoughts work best; and the Planning and Execution side decides what to focus on, schedule, and do.
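One way to read that division of labor is as a pipeline: situation assessment proposes what could be done, the VJ module scores each option against the world model, and planning/execution picks and schedules the best ones. The Python below is a minimal sketch of that reading only. The candidate names, durations, scores, and the greedy time-budget scheduler are all hypothetical and are not taken from the Albus paper.

```python
# Hypothetical sketch: situation assessment -> value judgment -> planning.
# All names, numbers, and the greedy scheduling rule are illustrative.
from typing import Callable, Dict, List, Tuple

# (name, estimated duration in seconds): a hypothetical candidate action
Candidate = Tuple[str, float]

def assess_situation() -> List[Candidate]:
    """Situation assessment: list what could possibly be done now (assumed)."""
    return [("greet", 1.0), ("ask_name", 2.0), ("ask_goal", 3.0), ("tell_joke", 2.5)]

def make_vj(world_model: Dict[str, bool]) -> Callable[[str], float]:
    """Value judgment: score each option against the world model.
    The scores are made-up numbers for illustration."""
    def vj(name: str) -> float:
        if name == "ask_name":
            return 0.0 if world_model.get("name_known") else 5.0
        if name == "ask_goal":
            return 0.0 if world_model.get("goal_known") else 8.0
        return {"greet": 3.0, "tell_joke": 1.0}.get(name, 0.0)
    return vj

def plan(candidates: List[Candidate], vj: Callable[[str], float],
         time_budget: float) -> List[str]:
    """Planning/execution: rank by VJ score, then greedily schedule the
    best positively valued options that fit within the time budget."""
    ranked = sorted(candidates, key=lambda c: vj(c[0]), reverse=True)
    schedule: List[str] = []
    used = 0.0
    for name, duration in ranked:
        if vj(name) <= 0.0:
            break  # everything from here on is judged not worth doing
        if used + duration <= time_budget:
            schedule.append(name)
            used += duration
    return schedule

if __name__ == "__main__":
    vj = make_vj({"name_known": False, "goal_known": False})
    print(plan(assess_situation(), vj, time_budget=5.0))  # ['ask_goal', 'ask_name']
```

Changing the world model changes the plan without touching the planner: if the goal is already known, the same call yields ['ask_name', 'greet'].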

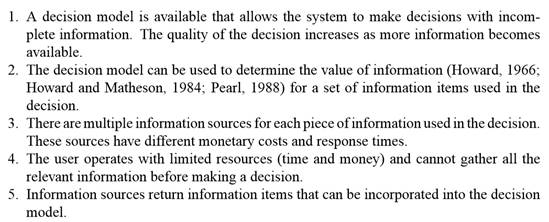

A paper titled "A Value Driven System for Autonomous Information Gathering" is here:

http://rbr.cs.umass.edu/papers/GZjiis00.pdf

Here is a quick summary of that paper's content:

And finally, there needs to be a script of textual utterances, with patterns to be matched against variable bindings. Each node in the DAG should also have a slot for a function capable of estimating the value of each utterance to each goal, as well as the duration and cost of uttering and processing each utterance.
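As a rough illustration, a DAG node carrying those slots might look like the Python sketch below. The field names, the use of regular expressions with named groups as the "patterns", and the str.format placeholders for variable bindings are all assumptions of mine, not a settled design.

```python
# Hypothetical conversation-DAG node with the slots described above.
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

@dataclass
class UtteranceNode:
    utterance: str                    # text to utter; {name}-style variables
    expected_patterns: List[str]      # regexes with named groups for the reply
    value_fn: Callable[[str], float]  # estimated value of this utterance to a goal
    duration_s: float                 # estimated time to utter and process
    cost: float                       # estimated cost to utter and process
    children: List["UtteranceNode"] = field(default_factory=list)

    def render(self, bindings: Dict[str, str]) -> str:
        """Fill the current variable bindings into the utterance text."""
        return self.utterance.format(**bindings)

    def match(self, reply: str) -> Optional[Dict[str, str]]:
        """Return new bindings from the first pattern that matches, else None."""
        for pattern in self.expected_patterns:
            m = re.search(pattern, reply)
            if m:
                return m.groupdict()
        return None

ask_city = UtteranceNode(
    utterance="Which city are you flying to, {name}?",
    expected_patterns=[r"to (?P<city>[A-Z][a-z]+)", r"^(?P<city>[A-Z][a-z]+)$"],
    value_fn=lambda goal: 10.0 if goal == "book_flight" else 0.0,
    duration_s=4.0,
    cost=1.0,
)

print(ask_city.render({"name": "Ann"}))       # Which city are you flying to, Ann?
print(ask_city.match("I'm going to Boston"))  # {'city': 'Boston'}
```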

That is where the DAG comes in, IMHO. Every statement that is matched would have follow-on questions to ask, together with a new set of expected patterns to be matched. One way to choose the next question would be to pick the highest-value, lowest-cost question designed to elicit an answer that is not already known and cannot be deduced from the current world model.
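Here is a small Python sketch of that selection rule, under some loud assumptions: each question is tagged with the single fact it would elicit, "deducible" means derivable by naive forward chaining over toy if-then rules, and "highest value, lowest cost" is collapsed into a value-to-cost ratio. Value minus cost, or a lexicographic ordering, would be equally defensible choices.

```python
# Hypothetical next-question selection: skip known or deducible facts,
# then pick the best value/cost ratio. All names and numbers are assumed.
from dataclasses import dataclass
from typing import Dict, List, Optional, Set

@dataclass
class Question:
    text: str
    asks_for: str   # world-model fact the answer would supply (assumed slot)
    value: float    # estimated value toward the current goals
    cost: float     # estimated cost to utter and process; assumed > 0

def closure(known: Set[str], rules: Dict[str, List[str]]) -> Set[str]:
    """Everything deducible from the known facts by forward chaining over
    rules of the form {conclusion: [premise, ...]}."""
    derived = set(known)
    changed = True
    while changed:
        changed = False
        for conclusion, premises in rules.items():
            if conclusion not in derived and all(p in derived for p in premises):
                derived.add(conclusion)
                changed = True
    return derived

def next_question(candidates: List[Question], known: Set[str],
                  rules: Dict[str, List[str]]) -> Optional[Question]:
    """Drop questions whose answer is already known or deducible, then
    return the one with the best value-to-cost ratio (None if none left)."""
    derivable = closure(known, rules)
    useful = [q for q in candidates if q.asks_for not in derivable]
    if not useful:
        return None
    return max(useful, key=lambda q: q.value / q.cost)

if __name__ == "__main__":
    known = {"destination"}
    rules = {"country": ["destination"]}  # toy rule: destination implies country
    candidates = [
        Question("Where are you going?", "destination", 9.0, 1.0),
        Question("Which country is that in?", "country", 5.0, 1.0),
        Question("When do you want to travel?", "date", 8.0, 2.0),
        Question("Window or aisle?", "seat", 3.0, 1.0),
    ]
    print(next_question(candidates, known, rules).text)  # When do you want to travel?
```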

But variation helps make the utterances more interesting. So each node in the conversation DAG should be a possible child of a branching node whose children are the alternative utterances (two, or perhaps many more), each with a possible next conversation move.
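A branching node of that kind could be sketched in Python as below. The class names are hypothetical, and the rule of picking an alternative at random while avoiding an immediate repeat is just one assumed way to get variation; weighted or context-sensitive choices would fit the same structure.

```python
# Hypothetical branching node whose children are interchangeable utterances.
import random
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Move:
    """One concrete utterance plus the branching nodes that may follow it."""
    utterance: str
    next_moves: List["Branch"] = field(default_factory=list)

@dataclass
class Branch:
    """Branching node: each child is an alternative way to make the same move."""
    alternatives: List[Move]
    last: Optional[Move] = None  # most recently chosen child

    def choose(self, rng: random.Random) -> Move:
        """Pick an alternative at random, avoiding an immediate repeat
        whenever more than one alternative exists."""
        pool = [m for m in self.alternatives if m is not self.last] or self.alternatives
        self.last = rng.choice(pool)
        return self.last

if __name__ == "__main__":
    ask_name = Branch([Move("What's your name?"),
                       Move("May I ask who I'm speaking with?"),
                       Move("And you are?")])
    rng = random.Random(0)
    for _ in range(3):
        print(ask_name.choose(rng).utterance)
```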

Reviews of discourse representation structures (DRS), especially Kamp's, should help interested readers (like myself) put some meat on those bones, so here is a reference to Kamp's work that I found in PDF form:

http://www.ims.uni-stuttgart.de/institut/mitarbeiter/uwe/Papers/DRT.pdf

Does anyone have references to a text generation paper they especially like?

Sincerely,
Rich Cooper

Rich Cooper,
Chief Technology Officer,
MetaSemantics Corporation
MetaSemantics AT EnglishLogicKernel DOT com
( 9 4 9 ) 5 2 5-5 7 1 2
http://www.EnglishLogicKernel.com

-----Original Message-----
From: ontolog-forum-bounces@xxxxxxxxxxxxxxxx [mailto:ontolog-forum-bounces@xxxxxxxxxxxxxxxx] On Behalf Of John F Sowa
Sent: Tuesday, July 14, 2015 8:35 PM
To: ontolog-forum@xxxxxxxxxxxxxxxx
Subject: Re: [ontolog-forum] Ontology based conversational interfaces

Rich and Tom,

1983 was one of the "boom times" in the boom-and-bust cycle of AI.

That's when AI researchers were getting LISP machines and high-end workstations. There was a lot of optimism about getting truly intelligent systems. The Cyc project was started in 1984 with a 10-year plan to solve all the problems.

RC
> a free pdf about discourse and conversational analysis:
>
> https://abudira.files.wordpress.com/2012/02/discourse-analysis-by-gillian-brown-george-yule.pdf

TJ
> I think it's definitely worth a read, although, being published in
> 1983, most of its value probably lies in documenting the history of
> discourse analysis...

That book does a good job of surveying the complex issues about the semantics of natural language and the many, many ways that language is related to context, speakers, presuppositions, etc.

And they also show the huge number of reasons why we still do not have computer systems today that can understand natural language.

For just one of the many reasons why formal systems for NLP have failed, look at page 80 of that book (if you're using the Adobe reader, it's p. 47):

> In this approach, each participant in a discourse has a presupposition
> pool and his pool is added to as the discourse proceeds. Each
> participant also behaves as if there exists only one presupposition
> pool shared by all participants in the discourse. Venneman emphasizes
> that this is true in 'a normal, honest discourse'.

The last line is a typical method for dismissing all the hard parts.

The authors of the book recognize and discuss the many complex issues involved in that assumption. Unfortunately, what Venneman calls "a normal, honest discourse" rarely, if ever, exists -- I don't believe that the terms 'normal' or 'honest' are appropriate.

Unfortunately, the boom years of the 1980s were followed by a typical bust, when people realized that language understanding is much harder than anybody had anticipated. I often quote Alan Perlis:

"A year spent working in artificial intelligence is enough to make one believe in God."

Those issues are the theme of a talk I presented last year on "Why has AI failed? And how can it succeed?"

http://www.jfsowa.com/talks/micai.pdf

John
