Thank you, Rich, much appreciated.

This does feel “revolutionary” to me – and yes, still raw and unproven/untested, and a bit of a tough row to hoe, because this approach kind of stands the industry on its head, and asserts the primacy of top-down definition – but this thesis – that word meaning is best and “most scientifically” and indeed “empirically” understood as stipulative and intentional in the specific context of actual usage, rather than something to be inferred from a huge pool of empirical samples, or grounded in something Platonic and absolute – feels to me like a gateway into an explosive and more reliably accurate new kind of ontology.

This is how it actually works, and this is what we are doing all the time when we want to get clear on something under discussion.

So – the actual act of communication takes place just as you diagram. The speaker offers some intended abstractions, the listener has to parse and interpret those abstractions, questions arise in the mind of the listener, the listener challenges the speaker – and from the infinite fluidity of possible interpretations of the abstraction (as per “Sowa’s Law”) – the speaker makes particular and “motivated” choices and assigns an increasingly specific value to the dimension or range of value in question.

My own instinct is – mathematical analysis has not yet really succeeded in analyzing the process of abstraction.

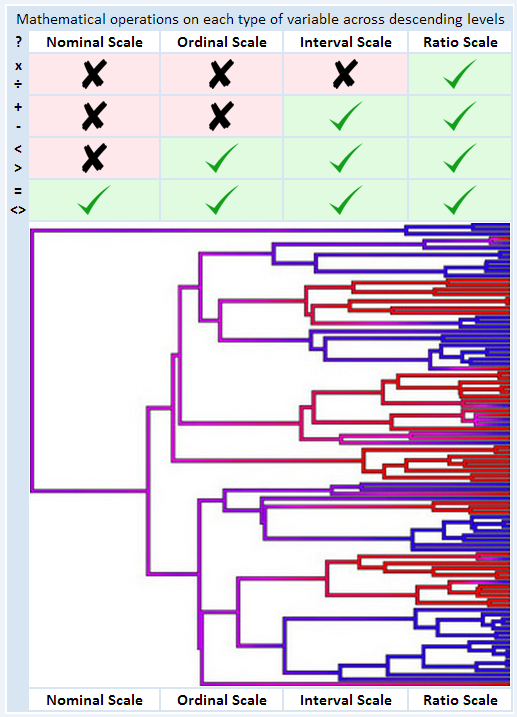

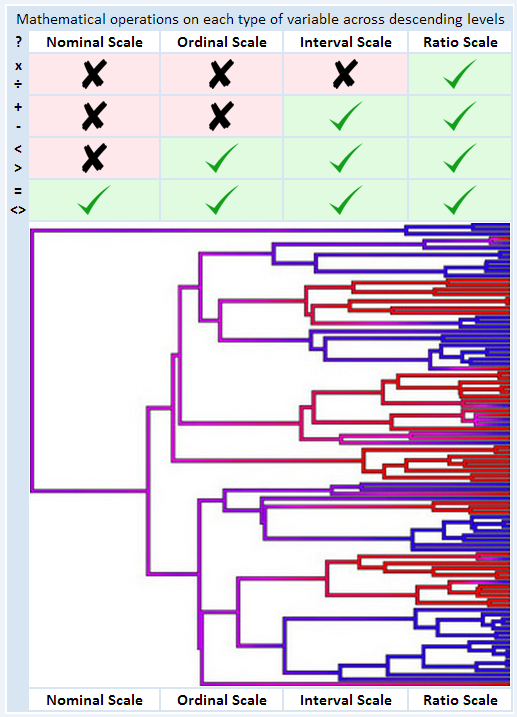

The dimensions in the process are seen by most working scientists and mathematicians as divided into two major areas – “quantitative” (numerical dimensions in linear units) and “qualitative” (inherently confusing and beyond any possibility of analysis, so don’t mess with it).

But this is not the strongest way to see it, as many researchers working in social sciences or psychology know. There are ways to “dimension” the qualitative levels of abstraction – and semantic ontologists ought to be doing this (imho).

The industry standard on this issue was generally defined by Stanley Smith Stevens:

http://en.wikipedia.org/wiki/Stanley_Smith_Stevens

And his “levels of measurement” theory http://en.wikipedia.org/wiki/Level_of_measurement is admired by some, and seen as controversial by others (it looks to me like the Wikipedia article has been most recently edited by one of the doubters, because the basics of my below image were on the Wikipedia page a year or two ago).

Stevens divides these levels of scale into “ratio”, “interval”, “ordinal” and “nominal” – where “ratio” supports all basic arithmetic operations (lowest level, quantitative) and “nominal” supports only tests of equality or difference (= and <>).
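As a rough illustration of Stevens' four levels, the sketch below records which comparisons and arithmetic each level is conventionally said to permit, with each level inheriting what the less quantitative levels already allow. The names `Level`, `NEW_OPERATIONS`, `permitted` and `permits` and the operation labels are invented for this example; the operation sets follow the usual textbook summary of Stevens' scheme, which (as noted above) some people dispute.

```python
from enum import IntEnum


class Level(IntEnum):
    """Stevens' levels, ordered from most abstract to most quantitative."""
    NOMINAL = 1
    ORDINAL = 2
    INTERVAL = 3
    RATIO = 4


# Operations first admitted at each level (illustrative labels, not a standard API).
NEW_OPERATIONS = {
    Level.NOMINAL: {"equal", "not_equal"},
    Level.ORDINAL: {"less_than", "greater_than"},
    Level.INTERVAL: {"add", "subtract"},
    Level.RATIO: {"multiply", "divide"},
}


def permitted(level: Level) -> set[str]:
    """Collect the operations of this level and of every less quantitative level."""
    ops: set[str] = set()
    for candidate in Level:
        if candidate <= level:
            ops |= NEW_OPERATIONS[candidate]
    return ops


def permits(level: Level, operation: str) -> bool:
    """True if the given operation is meaningful at the given level."""
    return operation in permitted(level)


# Print the cumulative operation set for each level.
for level in Level:
    print(level.name.lower(), sorted(permitted(level)))
```

Read top-down, each step toward “ratio” adds operations, which is one way to picture the cascade from naming toward measurement.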

If we empower the concept of stipulative definition – arguably the best and most authentically “empirical” definition of “where meaning comes from” -- we can then assert the possibility of an absolute linear cascade of precise definition from the highest levels of abstraction (nominal or ordinal) to the lowest level (ratio) – somewhere down near the real number line or the continuum – the “reality” in the “reality is continuous, concepts are discrete” model.

Seen this way, every abstraction can be grounded in floating point numbers (on the right side of the diagram) to “any desired degree of specificity” – just by parsing the dimensional cascade through a cycle of converging iterations.

This does get a little tricky, but under stipulation each level can be assigned an intentional value by the speaker – creating an absolutely linear top-down dimensional cascade to the empirical ground of measurement.

Because all of this looks correct to me, I tend to see all of language as “intentional dimensional parsing within a universal space” that looks more or less like this cascade, where all that (implicit or composite) dimensionality is actually assembled from the lowest-level mathematical unit in the entire process – which is “distinction” or “cut”.

So, everything in this framework is “assembled from cuts (distinctions)” – and a hierarchy of abstraction is a fractal cascade of intentionally-motivated cuts, customized by a speaker in an ad hoc way for one instant of communication. It’s not something I can quite (yet?) prove – but it looks to me like this framework contains the entire bounded range of all semantic construction, in one linear/taxonomic cascade. The reason it works – is because we recognize and empower stipulation as the authentic ground of definition.

What we need to set fire to this so-called revolution – is a clear linear proof of how distinction – a cut in something – is replicated across the levels. The guiding mantra is “a concept is a cut on a cut on a cut on a cut on a cut on a cut on a cut…” and that’s why it’s “fractal”. The top of the cascade is something like “universal oneness” or “the absolute container” or maybe just “one”, and the bottom of the cascade is “the infinitesimal” – with every possible particular parsing of semantic space being some instance within this range of values.

So -- what is the ontology of “a cut on a cut” – a distinction made on a distinction? How can we define a “cut on a cut” as our absolute ground without going into an infinite loop (I am reminded that Georg Cantor spent time in mental hospitals)? But that’s what taxonomies are doing – “dogs” are a cut on “mammals” and “Labradors” are a cut on “dogs”. See this in absolutely stipulative terms, and all the dimensional confusions that frustrated Wittgenstein go away…
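One possible way to picture “a cut on a cut” in code is a chain of distinctions, each drawn inside its parent. This is only a sketch under assumptions: the class `Cut`, its fields, and the predicates below are stipulated for the example and are not a proposed formalism. The chain is finite and ends at a cut with no parent, so walking it does not loop forever.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Cut:
    """A distinction drawn inside a parent distinction (None means the undivided whole)."""
    name: str
    test: Callable[[dict], bool]  # the stipulated distinction
    parent: Optional[Cut] = None

    def contains(self, thing: dict) -> bool:
        # A thing falls under a concept only if it passes every cut up the chain.
        cut: Optional[Cut] = self
        while cut is not None:
            if not cut.test(thing):
                return False
            cut = cut.parent
        return True

    def path(self) -> list[str]:
        # Names of the cuts from the broadest down to this one.
        names = []
        cut: Optional[Cut] = self
        while cut is not None:
            names.append(cut.name)
            cut = cut.parent
        return list(reversed(names))


# Hypothetical stipulations for one conversation; another speaker could cut differently.
mammals = Cut("mammals", lambda t: t.get("nurses_young", False))
dogs = Cut("dogs", lambda t: t.get("species") == "Canis familiaris", parent=mammals)
labradors = Cut("Labradors", lambda t: t.get("breed") == "Labrador", parent=dogs)

rex = {"nurses_young": True, "species": "Canis familiaris", "breed": "Labrador"}
fifi = {"nurses_young": True, "species": "Canis familiaris", "breed": "Poodle"}

print(labradors.path())
print(labradors.contains(rex), labradors.contains(fifi), dogs.contains(fifi))
```

Because every predicate is supplied by the speaker, the same structure can hold a different taxonomy in the next conversation.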

Why should words – which are just heuristic conveniences serving immediate human needs, taking the exact shape and meaning that some human intends at some particular moment – have any sort of absolute Platonic definition? It’s a fantasy. “Get over it.”

I suspect even the issue of “faceted classification” in library science can be melted by something like this approach.

http://en.wikipedia.org/wiki/Faceted_classification

Let it all be ad hoc and context-specific, and stipulate the facets in all their nuance and intentional particularity – and the human race moves into an entirely new era of freedom and fluency and understanding….

:-)

Bruce Schuman

FOCALPOINT: http://focalpoint.us

NETWORK NATION: http://networknation.net

SHARED PURPOSE: http://sharedpurpose.net

INTERSPIRIT: http://interspirit.net

(805) 966-9515, PO Box 23346, Santa Barbara CA 93101

From: ontolog-forum-bounces@xxxxxxxxxxxxxxxx [mailto:ontolog-forum-bounces@xxxxxxxxxxxxxxxx] On Behalf Of Rich Cooper

Sent: Thursday, October 23, 2014 1:59 PM

To: '[ontolog-forum] '

Subject: Re: [ontolog-forum] "Data/digital Object" Identities

Bruce Schuman wrote:

the principle of context-specific stipulative definition in the actual/empirical context of usage -- intentionally parsing reality in some specific act of communication, such that “the words" used in that act of communication most authentically have "the meaning" intended by the speaker -- and it is up to the speaker to defend that meaning by "dimensioning" the specific values within the implicit undefined abstractions of the statement. This is how human beings actually communicate – and when the “dialog” or drill-down Q and A does not narrow the bounded ranges of possible interpretive confusions, we have failure of communication and fights.

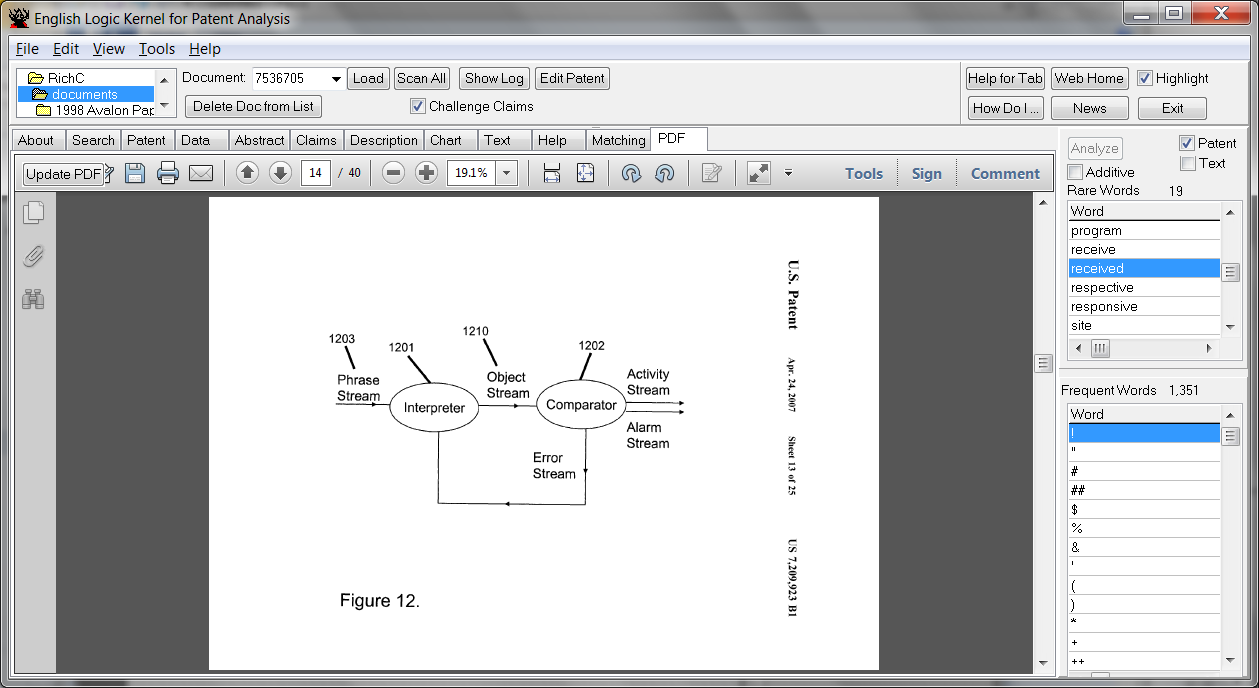

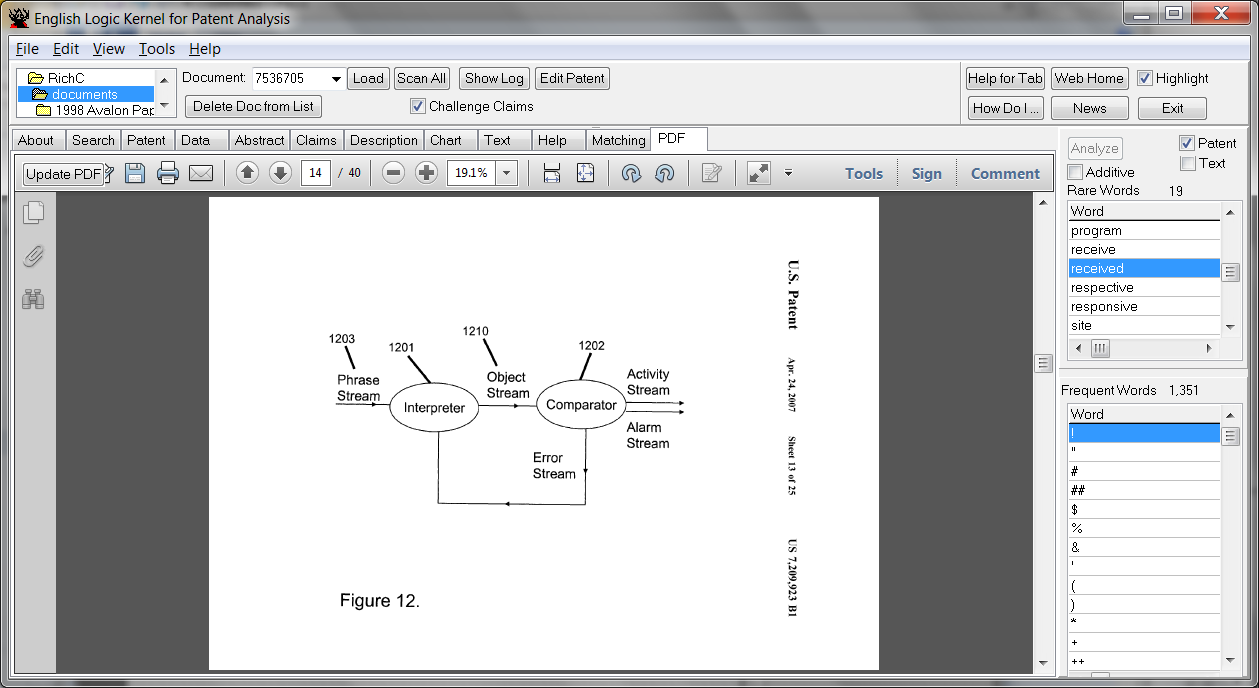

Absolutely agree. Each item of conversation has a place in a description which the sender wants the receiver to understand. However, the receiver will generate her own understanding of the item, and of the entire conversation, from her (receiver’s) viewpoint. So even though the sender wants to get a message X across to the receiver, the receiver gets her own message, interprets it for her own purposes, and the iterative nature of the conversation has a feedback effect as shown below:

The figure shows figure 12 of my patent, which emphasizes that both sender and receiver cooperate in question and answering iterations in normal conversation. So a program that is designed to converse with people should be able to approach the conversational task that way.

-Rich

Sincerely,

Rich Cooper

EnglishLogicKernel.com

Rich AT EnglishLogicKernel DOT com

9 4 9 \ 5 2 5 - 5 7 1 2

JS:

Yes. The hope that identity conditions can clarify ontological issues comes from the illusory simplicity of the '=' symbol in mathematics.

But the only reason why identity in math is well defined is that mathematicians have total control over the theories they specify.

But the real world is beyond our control. We can observe it, but we can't dictate how it should operate. We can observe similarities and differences. But identity about any physical things of any kind is *always* an inference. Any ontology that takes identity as a well-defined primitive is making an unwarranted assumption.

It seems this principle has to be echoed over and over again. Since I first heard this idea about 1984, I am perversely inclined to call it "Sowa's Law" -- which is "Reality is continuous, concepts are discrete" ("deal with it"). But it's reinforced from many perspectives, and one that helped open my eyes to this theme was the awesome "Programming Languages, Information Structures, and Machine Organization", by Peter Wegner (1968), which goes over all the levels and layers of information mapping, layers of coding, layers of languages, layers of machine structure, all interconnected.

I was already inclined this way for a lot of reasons, but this comprehensive mapping of the information business further convinced me that in any kind of science, we are not actually studying "reality" -- we are studying the properties of our maps, and then testing the correlation between the map and the reality, and hoping for convergence -- knowing that there will always be some error, and trying to minimize that error -- some "bounded range of uncertainty" -- so that all measurements should be understood in a finite number of decimal places, and the "actual value" is trapped between two decimal places (this is the “discrete/continuous” mystery).

Since the decimal-place system is inherently a hierarchical taxonomy of cuts in the real number line, every model of reality defined in numbers is about "acceptable error tolerance" for some purpose. A lot of successful human communication is about negotiating this acceptable error tolerance -- knowing that we can't get it "perfect" ("because reality isn't like that"), but that we can drive this discussion with drill-down questions until everything we need to talk about is within "acceptable boundary values" and "we agree" (within error tolerances acceptable to both parties).
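The idea of decimal places as nested cuts can be sketched in a few lines. This is a minimal illustration, assuming the value is given as a decimal string and that both parties have agreed on a tolerance; the function name `decimal_cuts` and the sample numbers are made up for the example.

```python
from decimal import Decimal, ROUND_FLOOR


def decimal_cuts(value: str, tolerance: str) -> list[tuple[Decimal, Decimal]]:
    """Nested intervals [low, high) that trap `value`, one per decimal place.

    Starts from whole units and stops once the interval is no wider
    than `tolerance`.
    """
    x = Decimal(value)
    tol = Decimal(tolerance)
    if tol <= 0:
        raise ValueError("tolerance must be positive")
    intervals = []
    step = Decimal(1)
    while True:
        # Cut the number line into pieces of width `step` and keep the piece holding x.
        low = (x / step).to_integral_value(rounding=ROUND_FLOOR) * step
        intervals.append((low, low + step))
        if step <= tol:
            return intervals
        step /= 10  # drill down one more decimal place


# Each line is one more level of the cascade: 3..4, then 3.1..3.2, then 3.14..3.15.
for low, high in decimal_cuts("3.14159", "0.01"):
    print(f"{low} <= value < {high}")
```

Each extra decimal place is one more drill-down question; the loop stops when the remaining uncertainty is within the tolerance both sides accept, not when the value is known exactly.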

I haven't actually seen the Wegner book in a long time, but I just ordered a used copy from Amazon this morning, and found this list of Wegner publications, that absolutely looks like my kind of thing:

http://www.amazon.com/Peter-Wegner/e/B00J1T3QXE/ref=ntt_dp_epwbk_0

One of these days, I'll take another crack at the core thesis for a general ontology, which is that all these interconnected structures can be 100% linearly assembled using ONE primitive mathematical element -- which is: distinction. Telling two things apart, by making a "cut" in some dimension of similarity we discern (this is essentially a modern take on Linnaeus). So, the thesis is: every concept in reality is a hierarchical assembly of motivated cuts organized in a kind of fractal cascade across levels of abstraction, from broad generalizations to specific boundary values in specific dimensions. This works, and is true, because the most authentic empirical foundation for word meaning is not some huge shared database of "6 million word senses" (though we may need such things for machine translation of natural language, and we need a common pool of shared if approximate word meanings), but the principle of context-specific stipulative definition in the actual/empirical context of usage -- intentionally parsing reality in some specific act of communication, such that “the words" used in that act of communication most authentically have "the meaning" intended by the speaker -- and it is up to the speaker to defend that meaning by "dimensioning" the specific values within the implicit undefined abstractions of the statement. This is how human beings actually communicate – and when the “dialog” or drill-down Q and A does not narrow the bounded ranges of possible interpretive confusions, we have failure of communication and fights.

This is why people hire lawyers when writing contracts….

Bruce Schuman


-----Original Message-----

From: ontolog-forum-bounces@xxxxxxxxxxxxxxxx [mailto:ontolog-forum-bounces@xxxxxxxxxxxxxxxx] On Behalf Of John F Sowa

Sent: Thursday, October 23, 2014 12:09 AM

To: ontolog-forum@xxxxxxxxxxxxxxxx

Subject: Re: [ontolog-forum] "Data/digital Object" Identities

Hans, Jack, William, Pat,

The belief that identity conditions will magically solve issues about ontology creates a false sense of security -- and a huge number of bugs.

HP

> a principle called “Entity Primacy”, which basically states that

> whatever identity you might assign to an entity/object, it has other

> identities in other, usually collective, frames of reference.

JP

> I think it is correct to argue that there are many different ways in

> which some entity is identified by different individuals

WF

> 'identity' is a kind of a very ghostly abstraction without much mooring.

Yes. The hope that identity conditions can clarify ontological issues comes from the illusory simplicity of the '=' symbol in mathematics.

But the only reason why identity in math is well defined is that mathematicians have total control over the theories they specify.

But the real world is beyond our control. We can observe it, but we can't dictate how it should operate. We can observe similarities and differences. But identity about any physical things of any kind is *always* an inference. Any ontology that takes identity as a well-defined primitive is making an unwarranted assumption.

WF

>> Is a data object in one format the same as a data object in a

>> different format or a different one? The bit streams can change but

>> the original identity might be considered the same." This applies to

>> *all* human artifacts. When is Moby Dick the 'same' book?

PH

> It applies to everything, natural or artificial. When is Iceland the

> same island? How many lakes are there in Norway? It is endemic in the

> way we use language (and probably in how we think about the world.)

I agree. But I would add that the reason why our languages and ways of thinking are so flexible is that they have adapted to the very changeable world. If we want our ontologies to adapt to the world, they will have to become as flexible.
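The data-object question quoted above can be made concrete with a small sketch. It assumes two hypothetical identity conditions, `same_bytes` and `same_content`, and two made-up JSON serializations of one record; neither condition is "the" right one, which is the point.

```python
import hashlib
import json


def same_bytes(a: bytes, b: bytes) -> bool:
    """Identity condition 1: the bit streams match exactly."""
    return hashlib.sha256(a).digest() == hashlib.sha256(b).digest()


def same_content(a: bytes, b: bytes) -> bool:
    """Identity condition 2: the parsed data match, whatever the layout."""
    return json.loads(a) == json.loads(b)


# One record written out in two layouts (key order and whitespace differ).
compact = b'{"title":"Moby Dick","year":1851}'
pretty = b'{\n  "year": 1851,\n  "title": "Moby Dick"\n}'

print(same_bytes(compact, pretty))    # False: the bit streams differ
print(same_content(compact, pretty))  # True: the parsed records are equal
```

Which answer counts as "the same object" depends on the purpose at hand, so the identity condition is chosen by the user, not given by the data.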

PH

> In some ways the digital/semantic technologies are making this worse.

> Ontologies impose artificial identity conditions of their own, which

> can clash both with other ontologies and with human intuitions.

I strongly agree.

PH

> For example, the widely popular provenance ontology

> http://www.w3.org/TR/prov-primer/ views every change as producing a

> new entity, so changing a tire on a car gives you a new car.

Philosophers have a good phrase for the authors of such documents:

They are "in the grip of a theory". They sit in their armchairs and dream up theories without any input from reality. Unfortunately, they shove their theories down the throats of innocent students.

PH

> the 'oil and gas' (now generalized) ontology ISO 15926, widely used in

> industry, treats everything as what BFO would call an occurrent.

That is the foundation for Whitehead's process ontology: What we call physical objects are slowly changing processes. Whitehead developed that ontology when he was trying to reconcile modern physics with everyday life. Following is an article I presented at a conference on issues about Whitehead and Peirce:

http://www.jfsowa.com/pubs/signproc.htm

John

_________________________________________________________________

Message Archives: http://ontolog.cim3.net/forum/ontolog-forum/
